How Google Crawls your Website’s AMP pages, JavaScript and AJAX

Last updated: March 6, 2021

A huge “step” forward in how Google “crawls” Ajax content! Google is the best in the world at crawling content within JavaScript and AJAX. However, they are still perfecting it. Do you find it’s a battleground getting your site crawled?

Google has changed the way it handles AJAX calls to web content. John Mueller of Google said crawling of such content can be “on and off,” so there are extra SEO tactics that search experts can use to tell Google which content you intend to have crawled. Going forward, it is best not to rely on the way it used to be. Having an agile marketing process in place will help you navigate changes faster.

You can leverage insights discovered in your Google Search Console, and know better how to read and interpret them from answers and explanations in this article. Your Core Web Vitals reports reveal PageSpeed issues.

What Is Google Crawling and Indexing?

Crawling and indexing remain distinct tasks. Crawling is when Googlebot looks at all the content and code on a web page and analyzes it. Indexing is when that same page is eligible to be included and show up in Google’s search results. Since the Google Panda update, domain name importance has risen significantly. Your business growth from the online world depends on your web pages being crawled and indexed correctly.

Some businesses do all the work of creating great content and optimizing their site yet fail to get key content indexed. That’s why we recommend that your business planning sessions and strategy consider this upfront.

What is GoogleBot?

Googlebot is the search bot software used by Google, which collects documents from the web to build a searchable index for the Google Search engine.

Whether you are seeking to learn methods of the Google Crawler for earned search or paid search, SEO can improve search tactics with a correct understanding of GoogleBot.

GoogleBot is the arm of Google’s search engine that crawls your web pages and creates an index. It’s also known as a spider. GoogleBot uses machine learning to crawl every page you allow it to access and adds it to Google’s index where it can be retrieved and returned to match users’ search queries. Your efforts to clearly indicate to Google which pages on your website you want to be crawled and which ones you do not can seem like combat, too.

How do I know if my Site is in Google’s Index?

The Google Index information report in your Google Search Console will test a URL in your property. Its URL Inspection tool will reveal the URL’s current index status. You will need to enter the full URL to inspect it and gain an index status report.

You can use the Fetch as Google Tool to see how your site looks when crawled by Google. From this, site owners can take advantage of more granular options and can select how content is indexed on a page-by-page status. One example is the ability to see just how your pages appear with or without a snippet – in a cached version, which is an alternate version collected on Google’s servers in case the live page is not viewable at the moment.

Another way to check the index status of any site URL is to use the info: operator. Open the Google Chrome browser and enter info: followed by the URL in the address bar. This will trigger a Google display: Display Google’s cache of “example-domain-url”. Find web pages similar to “example-domain-url”.

How do Google Indexes work on JavaScript Sites?

When Google needs to crawl JavaScript sites, an additional stage is required that traditional HTML content doesn’t need. It is known as the rendering stage, which takes additional time. The indexing stage and rendering stage are separate phases, which lets Google index the non-JavaScript content first.

JavaScript is more time-consuming for Google to crawl and index. The reason is that it first needs to be downloaded, then parsed, and then executed.

How is JavaScript prerendering handled inside rendered output?

GoogleBot can pre-render JavaScript used inside the rendered output. The technology giant looks at prerendering JS from a user experience perspective. This makes it easier as it eliminates the need to remove JS from pre-rendered pages. If your site relies on JS to manage minor site content and layout updates but not AJAX requests, stay tuned to Martin Splitt, Google’s spokesman on advancing technology to handle such crawling/indexing cases.

How Important is Google’s Crawling and Indexing Process?

Getting your website crawled by Google and indexed correctly is the pivotal matter in Internet marketing success. It’s the entire starting point. You must have web crawling and the ability to be indexed to succeed. Without a sitemap upload into your website’s root folder, crawling can take a long time — maybe 24 hours or more to index a new blog post or deep website.

Most Internet surfers never realize the different steps you have taken to improve your site’s crawlability and indexation.

“The Google Search index contains hundreds of billions of webpages and is well over 100,000,000 gigabytes in size”, according to Google.

How to manage old assets for best crawling history?

Googlebot crawls various stale assets that are currently given a 404 status. Typically, old assets should be maintained until they cease being crawled. Eventually, Google will re-crawl the HTML content, assess new site assets, and update its crawler. We don’t recommend using 401 or 404 to manage old assets; this could result in broken renders, which should be avoided. It happens at times when using Rails Asset Pipeline for caching.

Two important concepts you need to understand for Google to crawl your website are:

1. If you want your site crawled and indexed, then search engine spiders need to be able to view your site correctly.

2. There is a lot you can do to ensure that your website is crawled correctly by Google’s spiders.

A web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web, typically for the purpose of web indexing. You can help Google understand your content better and get it indexed in Google’s library if you add all the essential schema markup types.

New Insights on Syndicated Content and How Google Crawls AJAX

Google can now index ajax calls and it is important to understand what that means in Google Search results.

When John Mueller was asked in last Friday’s English Google Webmaster Central office-hours hangout how syndicated content and an ajax call are handled, his response was: “In the past, we have essentially ignored that. What could be done is using JS.” I find it fascinating what has both changed and what we can expect. You could indicate that you wish to render a page for indexing while having a dynamic title left out. If you want to exclude a part of a page, you can do so with the robots.txt file to indicate that wish. Keep your Accelerated Mobile Page’s content as close as possible to your desktop versions.

For example, consider a product details page with a description, reviews, buttons, and answers (Q&A). That part of the syndicated call could be hidden; he would suggest moving that content into a separate directory within the site where you are aggregating it, so that the aggregated content can be blocked by your robots.txt file. This may avoid it looking like you are auto-generating content that doesn’t actually exist on the site.

“What we try to do is render the page as it would look in a browser. Look at the final results and use those results in search” added Mueller. If you want only part of a page to be left out of consideration, webmasters can block that part with the robots.txt file. Interestingly, even Mueller hinted that it can be tricky to indicate which AJAX content you don’t want to be parsed within a given page, while the rest of the page is parsed for AJAX content.

Google will not always crawl all of your JavaScript all the time. “It is still on and off but headed in the direction of more and more,” stated Mueller. It seems clear that Google’s developers are watching tests on how Google crawls AJAX and want to index titles that are injected with JavaScript properly and more consistently. It is not about “sneaking content to the user that isn’t being indexed”.

Manual maintenance of your website’s robots.txt file is still a good idea if you have specific content you do or don’t want to be crawled.
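As a rough illustration of that kind of maintenance, the sketch below uses Python’s standard `urllib.robotparser` to test a robots.txt file before publishing it. The `/syndicated/` directory and the example.com URLs are assumptions made up for this example, and Python’s parser is not Google’s own matcher, so treat the result as a sanity check rather than a guarantee of how Googlebot will behave.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the /syndicated/ directory name is an assumption
# used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /syndicated/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text in memory, without any network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A page outside the blocked directory stays crawlable.
product_allowed = parser.can_fetch(
    "Googlebot", "https://www.example.com/products/widget"
)
# Anything under /syndicated/ is disallowed for every user agent.
syndicated_allowed = parser.can_fetch(
    "Googlebot", "https://www.example.com/syndicated/reviews"
)

print("product page crawlable:", product_allowed)
print("syndicated page crawlable:", syndicated_allowed)
```

Running a check like this after every robots.txt change is a cheap way to catch a rule that accidentally blocks pages you want crawled.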

An Overview of How Google Crawls Websites

1. The first thing to know is that your website is always being crawled. Google has indicated that “Googlebot shouldn’t access your site more than once every few seconds on average.” In other words, your site is always being crawled, provided your site is correctly set up to be available to crawlers. Google’s “crawl rate” means the speed of Googlebot’s requests; it is not about how often your website is crawled. Typically, businesses want more visibility, which comes in part from more freshness, relevant backlinks with authority, social shares and mentions, etc. The more of these signals you have, the more likely it is that your site will appear in search results. Googlebot performs an enormous number of crawls, so it is not always feasible or necessary for it to crawl every page on your site all the time.

2. Google’s routine is to first access a site’s robots.txt file. From there it learns what a site owner has specified as to what content Google is permitted to crawl and index on the site. Any web pages that are marked as “disallowed” will not be crawled.

As is true for SEO work in general, keeping your robots.txt file up to date is important. It is not a one-time done deal. Knowing how to manage crawling with the robots.txt file is a skilled task. Your technical website audit should cover the coverage and syntax of your robots.txt and let you know how to fix any existing issues.

3. Google reads the sitemap.xml next. While search engines don’t need a sitemap to discover any and all areas of the site to be crawled and indexed, it still has a practical use. Because of how different websites are constructed and optimized, web crawlers may not robotically crawl every page or segment. Some content benefits more from a professional and well-constructed Sitemap; such as dynamic content, lower-ranked pages, or expansive content archives, and PDF files with little internal linking. Sitemaps also help GoogleBot quickly understand the metadata within categories like news articles, video, images, PDFs, and mobile.

4. Search engines more frequently crawl sites that have an established trust factor. If your web pages have gained significant PageRank, then we have seen times when Googlebot awards a site what is called “crawl budget.” The greater trust and niche authority your business site has earned, the more crawl budget you can anticipate benefiting from.

Why Site Linking Structure May Impact Crawl Rate and Domain Trust

Once you understand how the Google Crawler works, it is easier to respond to new updates, whether they reflect lifted search filters, a newly applied patch, or a change in domain link structure. Benchmark your site’s performance in SERP ranking changes as well as your competition to see if everyone gains a spike in converting traffic at a particular time. This will help to rule out an isolated occurrence.

Be ethical and earn domain trust. Rather than attempt to maintain a web server secret, simply follow Google’s search best practices from the beginning. “As soon as someone follows a link from your ‘secret’ server to another web server, your ‘secret’ URL may appear in the referrer tag and can be stored and published by the other web server in its referrer log. Similarly, the web has many outdated and broken links”, states the search giant. Whenever an individual publishes a link incorrectly to your website or fails to update links to reflect changes in your server, the result is that GoogleBot will now be attempting to download an incorrect link from your site.

How Marking Up Your Content Aids Google Crawler

When an SEO expert correctly implements Google structured data to mark-up web content, Google can better apprehend your context for exhibiting in Search. This means that you can realize superior distribution of your web pages to Internet users of Google Search. This is accomplished by marking up content properties and enabling schema actions where pertinent. This makes it eligible for inclusion in Google Now Cards, the large display of Answer Boxes, and Featured Rich Snippets.

Steps to Markup Web Content Properties For GoogleBot

1. Pinpoint the best data type from the table schema.org provides.

Find what best fits your content, then choose from the markup reference guide for that type to find the required and recommended properties. It is permissible to add markup for multiple content types into a single HTML or AMP HTML content page to aid your next Google crawl. We find that users favor news articles that contain video content, which creates a perfect opportunity to add markup to assist your content page eligibility for inclusion in top stories within the news carousel or rich results for video.

2. Craft a section of markup containing your key products and services.

Make it as easy as possible for your site to be crawled with the help of required structured data properties for visual presentation in SERPs that you want to gain. SEOs now have an extensive data-type reference to draw from that contains many examples of customizable markup. Crawling and indexing can be improved by using the speakable schema.org property which identifies sections within an article. It can pull out answers within your informational pages.

“If you deliver a server-side rendered page to us, and you have JavaScript on that page that removes all of the content or reloads all of the content in a way that can break, then that’s something that can break indexing for us. So that’s one thing where I would make sure that if you deliver a server-side rendered page and you still have JavaScript on there, make sure that it’s built in a way that when the JavaScript breaks, it doesn’t remove the content, but rather it just hasn’t been able to replace the content yet.” – John Mueller of Google

What is a Google Crawl Budget?

“The best way to think about it is that the number of pages that we crawl is roughly proportional to your PageRank. So if you have a lot of incoming links on your root page, we’ll definitely crawl that. Then your root page may link to other pages, and those will get PageRank and we’ll crawl those as well. As you get deeper and deeper in your site, however, PageRank tends to decline,” Matt Cutts of Google told Eric Enge of Stone Temple.

Before discussing crawl optimization with a prospective consultant, make sure they fully understand the essentials. Crawl budget is a term that some are unfamiliar with. It refers to the time or number of pages that Google allocates to crawling your site, and that allocation should be determined. If you resolve key issues that impede website performance, crawling may improve.

Matt Cutts of Google told SEOs what to keep top of mind as to the number of pages crawled. In 2010 he stated that “there isn’t really such thing as an indexation cap. A lot of people were thinking that a domain would only get a certain number of pages indexed, and that’s not really the way that it works. There is also not a hard limit on our crawl.”

We find it helps to view it with a focus on the number of pages crawled in proportion to your PageRank and domain trust. He added, “So if you have a lot of incoming links on your root page, we’ll definitely crawl that.” Learn more about what John Mueller has to say about a site’s backlink profile.

Sitemaps data in Index Coverage

Answering lingering questions about how Google crawls websites.

As the new Google Search Console is completed, many ask about which reports will still be available to better understand crawling and indexing.

“As we move forward with the new Search Console, we’re turning the old sitemaps report off. The new sitemaps report has most of the functionality of the old report, and we’re aiming to bring the rest of the information – specifically for images & video – to the new reports over time. Moreover, to track URLs submitted in sitemap files, within the Index Coverage report you can select and filter using your sitemap files. This makes it easier to focus on URLs that you care about.” – John Mueller on January 25, 2019

Given the rise of Google visual search, it is more important today to get your image and video files indexed properly. Strong visual assets can lead to sales. Proper indexing of product pages and images powers Google product carousels.

On 9.7.2016, John Mueller explained that if Google has to render a page and then sees a redirect, that causes a delay. When asked, “Is there any kind of schedule to when pages get crawled?” he answered, “It is scientific.”

When asked whether content with structured data that includes information such as price or out-of-stock items increases crawl rate so that the data stays accurate, the response was, “It is a complicated technical field.” John Mueller added, “I think structured data is something you can give us in different ways. Use the sitemap to let us know. Just because there is some pricing information out there doesn’t mean that data will update quickly.”

Panda Algorithm is Continuous But Doesn’t Run On Crawl

We know that site crawling is the process by which Googlebot discovers new and updated pages to be added to the Google index, and that it does so algorithmically: its computer programs determine which sites to crawl, how often pages are crawled, and the quality assessment given as it reprocesses the bulk of a website. A small website can generally be reprocessed in a couple of months. Publishing posts right on your Google Business Listing helps get those URLs directly in Google’s index.

The Panda algorithm does run continuously, and not to any predetermined timetable, but it does take a bit of time, like months for some sites, to collect relevant semantic signals for crawling. Crawling frequency varies from site to site, according to Google’s John Mueller.

Q. When asked, “What is the best way to use dynamic serving if you have both a desktop version of your site and an AMP version as your mobile version?”

A. “I believe we crawl the AMP page with the normal GoogleBot if you use dynamic serving we would never see the AMP page. Depending on the parameters you use on your agent, you suddenly get an AMP page versus an HTML page.” Dynamically served AMP pages are also complicated for non-Google clients like Twitter if they wanted to pull out the AMP version of a page. John Mueller urged webmasters to avoid technical issues on a website.

Q. A question was asked about how GoogleBot respiders a page that is disavowed after being targeted by negative SEO. The disavow file can be used to break the association with such a backlink. “Is it important for you to still crawl the backlink page for the disavow file to become updated?”

A. “We drop the link after we have re-crawled or reprocessed the other page. If we don’t bother recrawling it a lot, that is not going to have a lot of weight anyway. If it takes us 6 months to both crawling that page again.” Classifiers determine when a site is ready to be re-spidered and try to evaluate to shape general guidance for mobile indexing. The Search Giant is trying to figure out what is actually relevant for mobile crawling.

Watch the full Google Webmaster Central Hangout for the full details. Google tries to focus on the more important URLs within a website. If needed, submit spam reports and then it will try to recognize negative SEO by someone else that is meant to inhibit a site’s ability to be successfully crawled. In most cases, focus on what you can do to improve your site, establish positive search history, and make it even stronger and better.

“We don’t crawl URLs with the same frequency all the time. So some URLs we will crawl daily. Some URLs may be weekly. Other URLs every couple of months, maybe even every once half year or so.

And if you made significant changes on your website across the board then probably a lot of those changes are picked up fairly quickly but there will be some leftover ones.

Here’s a sitemap file with the last modification date so that Google goes off and tries to double-check these a little bit faster than otherwise.” – John Mueller
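To make the idea of a sitemap with a last modification date concrete, here is a minimal sketch that writes one with Python’s standard `xml.etree.ElementTree`. The page URLs and dates are invented for illustration; the `urlset`, `url`, `loc`, and `lastmod` element names come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # <lastmod> takes a W3C date; YYYY-MM-DD is the simplest valid form.
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and dates, for illustration only.
pages = [
    ("https://www.example.com/", date(2021, 3, 6)),
    ("https://www.example.com/blog/new-post", date(2021, 3, 1)),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

The dates are only useful if they are truthful: set `lastmod` from the real modification time of each page rather than the time the sitemap was generated.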

Existing Problems the Google Crawler may still Face

1. Sites with complicated URL structure, which is most often due to URL parameter issues. Mixing things like session ids into the path can result in a number of URLs being crawled. In practice, Google doesn’t really get stuck there; but it can waste a lot of resources that your site needs to use more wisely.

2. GoogleBot may slow down crawling when it finds the same path sections repeated over and over again.

3. To render content, if the Google Crawler cannot pull out page content immediately, it renders the pages to see what comes up. If there are any elements on the page that you have to click something or do something to see the content, that might also be something that it might miss. GoogleBot is not going to click around to see what might come up. John Mueller said, “I don’t think we try any of the clicking stuff. It is not like we scroll on and on.”

From what I understood of the conversation, it helps to differentiate between what is not loaded until the user takes an action and what GoogleBot cannot see without scrolling down.

Rather than spend extensive time configuring JavaScript to manage what displays on a page, look for what provides the end-user the most right and complete content. Consider tweaking your website’s pagination, JavaScript, and techniques that help the users have a better experience. “For the 3rd and last time, look at AMP,” reiterated Andrey Lipattsev at the close of the event.

We strongly recommend that every site prepares adequately for the rise in Google Mobile Search. Also, GoogleBot may time out trying to get embedded content. For users, this can make accessibility harder.

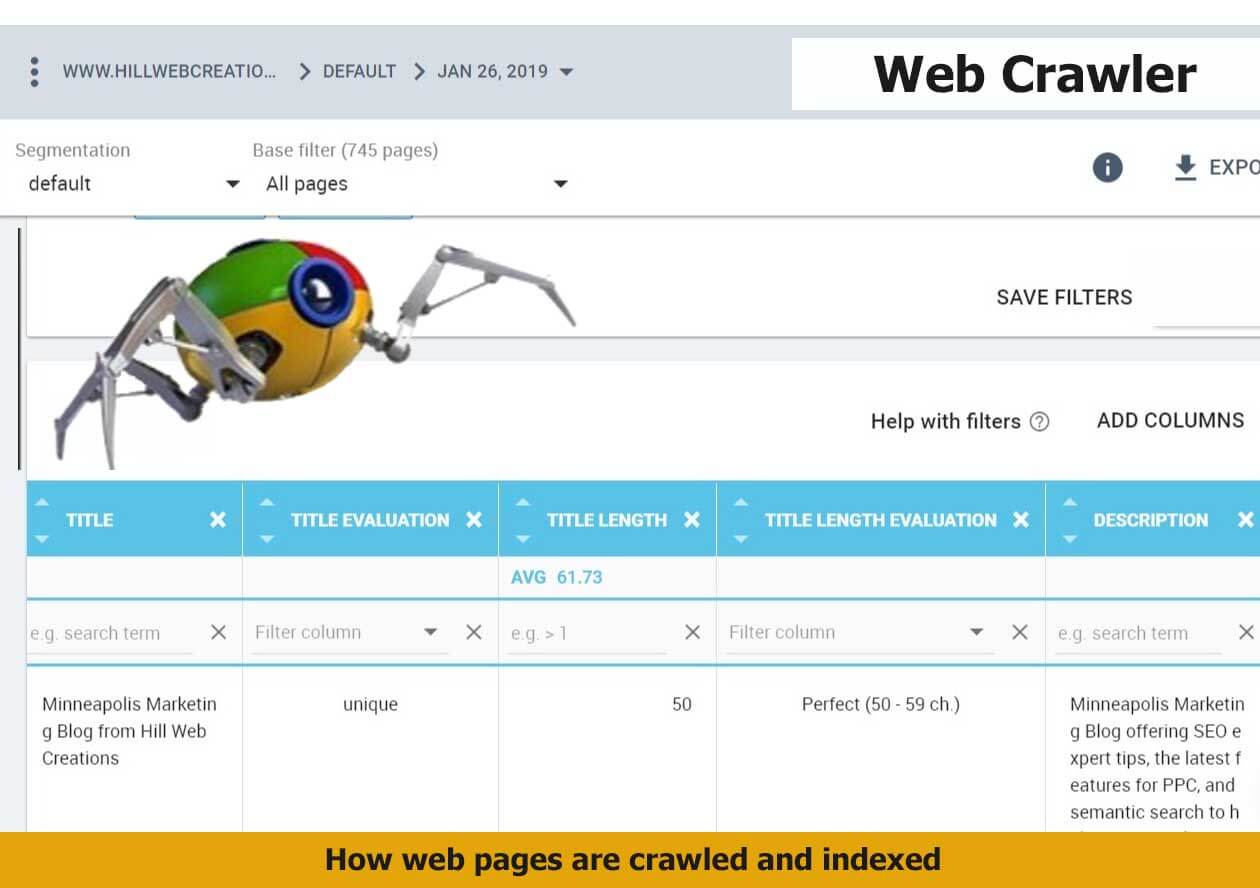

The most important information you want crawled is:

- Web URLs — Your pages, posts, and key document web URL addresses.

- Page Title tags — Page Title tags indicate the name of the web page, blog post, or news article.

- Metadata — This can encompass many things like your page’s description, structured data markup, and prevalent keywords.

This is the main information that GoogleBot retrieves when it crawls your site. And this is also most likely what you see indexed. That’s the basic concept. For the site that is advancing, there’s a lot more complexity to the way that your site may be crawled and how search results are returned, organized, and have a chance to show up in rich snippets. Note that Google notices reviews posted on its platform; if it won’t index your site for some reason, a Google review may pop up in the local pack and elsewhere.

How Google Crawls the New Domain Extensions

Google announced on July 7th, 2015 how they plan to handle the ranking of the new domains like .news, .social, .ninja, .doctor, .insurance, .shopping, and .video. In summary: they’ll be ranked exactly the same as .com and .net. In this creative digital environment, seeing a live demonstration of how Google experiences your site during a crawl will show how premium SEO better offers genuine, palpable, and tangible content for daily searches on the Internet. With new domain extensions here and expanding, if you use them, be sure that your site will automatically be crawled and content delivered quite naturally.

Google offers glimpses into how Google Crawler will handle these upcoming domains in search results, hoping to side-step possible misconceptions as to how they’ll process the latest domain extension options. When asked if a .BRAND TLD may gain more or less weight than a .com, Google responded: “No. Those TLDs will be treated the same as other gTLDs. They will require the same geotargeting settings and configuration, and they won’t have more weight or influence in the way we crawl, index, or rank URLs.”

For webmasters who may be wondering how the newer gTLDs impact search, we learned that Google will treat new gTLDs like other gTLDs (for example, .com, .net, and .org). From our interpretation of the post, the use of keywords in a TLD will not affect sites by granting a particular advantage or disadvantage in SERP rankings.

How often does GoogleBot crawl a website?

Newer sites and those that make infrequent updates are crawled less often. Googlebot may discover and crawl a new website in as little as four days if the right work has been done. We’ve also found that it may take four weeks. However, this really is an “it depends” answer; we’ve heard others claim indexing the same day. Google states that crawling and indexing are processes that can take some time and which rely on many factors.

How to Make AJAX Web Applications Crawlable?

When WebMasters choose to use an AJAX application with content intended to appear in search results, Google announced a new process that, when implemented, can help Google (and potentially additional major search engines) crawl and index your content. In the past, AJAX web applications have posed challenges for search engines to process due to the dynamic process that AJAX content may entail.

Most website owners have more important tasks at hand than to set up restrictions for crawling, indexing, or serving up web pages. It takes someone deep into SEO to specify which pages, or which sections on a page, are eligible to appear in search results. For the most part, if your web content is well optimized, your pages should get indexed without having to go to extra measures. For a more granular approach that is often needed for large shopping carts, many options are available for indicating preferences as to how the site owner permits Google to crawl and index their site. Most of this expertise is executed through the Google Search Console and a file called “robots.txt”.

John Mueller invited comments from webmasters as to crawling AJAX. As this develops further, Google is more forthcoming on how well, or just how, GoogleBot parses JavaScript and Ajax. It is best to stay tuned to development threads of communication on the topic before implementing too many opinions. For the time being, we recommend not consigning much of your important site elements or web content into Ajax/JavaScript.

More Advanced Means of Helping Google Crawl a Site

Within your Google Search Console, formerly known as Google Webmaster Tools, it is possible to set up URL Parameters. For a simple website, this is typically not needed; even Google forewarns users that they should have developed expertise in this SEO tactic before using it. Whether or not your site faces an issue with duplicate content may be one determination.

Crawl problems can be caused by dynamic URLs, which in turn could mean that you have some challenges on the URL parameter indices. The URL Parameters section permits Webmasters to configure their choice in how Google crawls and indexes your site with URL parameters. By default, web pages are crawled correspondingly to just how GoogleBot has determined to do so. Your pages that have key answers that people need should be double-checked; many individuals find answers in the People Also Ask section.
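The duplicate-URL problem that parameters create can be sketched in a few lines. The function below, a hypothetical helper rather than anything Google provides, collapses URLs that differ only by session or tracking parameters into one canonical form using Python’s standard `urllib.parse`. The list of ignored parameter names is an assumption; each site has to decide which of its own parameters do not change page content.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameter names assumed not to change page content. This list is
# illustrative only; every site has its own.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign"}


def canonicalize(url: str) -> str:
    """Drop ignorable query parameters and sort the rest into a stable order."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()
    # The fragment is dropped because it is never sent to the server.
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


variants = [
    "https://www.example.com/shoes?color=red&sessionid=abc123",
    "https://www.example.com/shoes?sid=9&color=red",
    "https://WWW.example.com/shoes?color=red#reviews",
]

canonical_urls = {canonicalize(url) for url in variants}
print(canonical_urls)
```

Three crawlable variants reduce to a single URL here, which is the same outcome a canonical link or a parameter setting is meant to communicate to the crawler.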

It is helpful if you have fresh content to win more frequent Google crawls. The more you post on your blog, the more frequently you can expect to be crawled. Formerly, the Google Search Console only stored historical crawl data for up to 90 days. With longer spans of historical data now available, SEOs who requested that increase are pleased to have more data to discover Google’s crawling habits as they relate to your site.

Preparing for Mobile Web Performance and Faster Google Crawls

Do You Know How Well Your Mobile Site is Crawled? Google’s Accelerated Mobile Pages (AMP) may well help website owners improve their performance in search rankings and crawlability for the mobile-first world. Switching to Google AMP and learning how it will impact your site’s crawlability typically requires someone experienced at the helm. For those watching site visibility and positioning, we know that load speed matters. If your web page is similar to another in all other characteristics but speed, then expect GoogleBot to favor the faster site that is easy to crawl and that users find compelling enough to rank at the top of SERPs.

If you need help updating to AMP web pages and then testing how your mobile site is crawled, read here to gain solutions. Sites may load differently on various mobile devices, which impacts load performance. Test to see if Google’s caching servers load faster on slower connections.

Quickly Fix Issues with Server Connectivity that Hurt Web Crawls

Too often business owners are unaware of the quality of their hosting and the server they are on. That brings up one very important point. If your website has connectivity errors, the result may be that Google cannot access the site when it tries to because your site is down or its servers are down. Especially if you are running a Google Ads campaign that links to a landing page that cannot load due to server issues, the results can be very destructive. You may get a warning in your Google AdWords console, and if you get too many of them, Google can cancel the ad.

But you have much to weigh beyond that. If this continues unheeded, Google may even stop coming to your site, your site’s health will be negatively impacted, your page rankings may plunge, and as a result, your traffic could decline significantly. It is pure logic: if Google can’t access your site for a long period of time, it needs, as we would, to move on to tasks that are doable. Set up an alert and keep a sharp eye on your server connectivity and crawl errors.
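A connectivity alert can start as something very small. The sketch below is one possible shape for such a check, using only Python’s standard library; `check_site` and `simulated_outage` are hypothetical names, and the `fetch` parameter exists so the check can be exercised without touching the network. A real monitor would run this on a schedule and send a notification when the result is not ok.

```python
import urllib.error
import urllib.request


def check_site(url, fetch=urllib.request.urlopen, timeout=10):
    """Return (ok, detail) for one request to url.

    `fetch` defaults to urllib.request.urlopen and can be swapped for a
    stand-in when testing offline.
    """
    try:
        with fetch(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as error:
        # The server answered, but with a 4xx or 5xx status.
        return False, f"HTTP {error.code}"
    except OSError as error:
        # URLError, timeouts, and refused connections all derive from OSError.
        return False, f"connection problem: {error}"
    return status < 400, f"HTTP {status}"


def simulated_outage(url, timeout):
    """Stand-in fetcher that behaves like an unreachable server."""
    raise urllib.error.URLError("connection refused")


outage_result = check_site("https://www.example.com/", fetch=simulated_outage)
print(outage_result)
```

Distinguishing a server that answers with an error from one that does not answer at all helps when reading crawl error reports, since the two usually have different causes.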

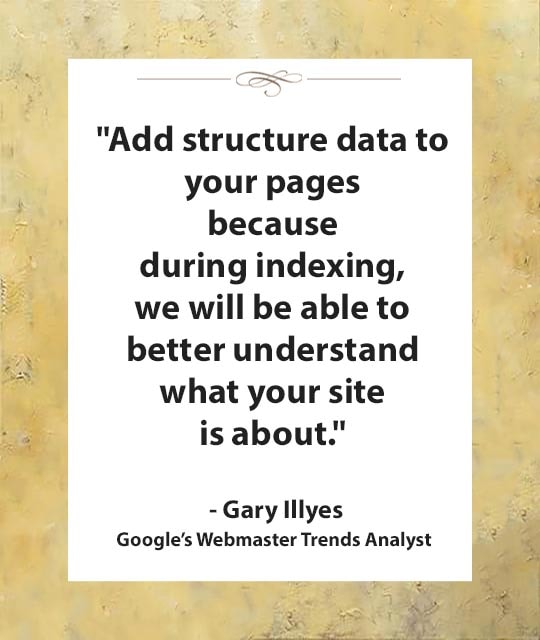

How is Schema Used for Indexing Websites?

Gary Illyes confirmed at the 2017 Pubcon that schema has a role in indexing and ranking your web pages, not just to help show up in rich search features. Jennifer Slegg reports on her SEM Post, that first more sites need to use it and then cautions that “you want to be careful you aren’t being spammy with your schema either, use only schema types that fit the page or the site. Otherwise, sites run the risk of getting a spammy structured data manual action.”

E-Commerce JSON-LD structured data is particularly important to have implemented if you have a shopping cart. The content on your website gets indexed and returned in search results. Schema markup helps your website rank better for every form of content. The content on your website gets indexed and returned in search results better when schema markup helps your individual pages be understood better for the topic they directly address. Keep a closer eye on the top 100 results in each category.
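For readers who have not seen e-commerce JSON-LD before, the following sketch generates a small Product block with Python’s `json` module. The product name, price, and helper function are made up for illustration; `Product`, `Offer`, `price`, `priceCurrency`, and `availability` are schema.org vocabulary, but which properties Google requires for a given rich result should be checked against its current documentation.

```python
import json


def product_json_ld(name, description, price, currency, in_stock):
    """Return a <script> tag holding schema.org Product markup as JSON-LD."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical product, for illustration only.
markup = product_json_ld(
    name="Trail Running Shoe",
    description="Lightweight shoe for rough terrain.",
    price=89.5,
    currency="USD",
    in_stock=True,
)
print(markup)
```

Generating the markup from the same data that renders the page keeps the price and stock status in the structured data consistent with what the visitor sees.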

How does GoogleBot Check Web Page Resources?

Most of your web pages use CSS and/or JavaScript to load. How your site is built and how many of these resources are used impacts your load times. Typically both CSS and JavaScript are loaded as external files that are linked to from your HTML. Google must have the access they want to these resources in order to fully understand your web pages. Often someone unfamiliar with technical SEO issues and how Google crawls your website will block these files within your robots.txt file. Read reports in your Search Console to better understand Google Crawler.

You can check to determine if your website is adhering correctly to this guideline.
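One way to run such a check locally is sketched below: it collects the external stylesheets and scripts a page references and reports any that the site’s robots.txt would block for Googlebot. The HTML snippet, the robots.txt rules, and the helper names are all assumptions for this example, and Python’s `urllib.robotparser` only approximates Google’s matching rules.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


class AssetCollector(HTMLParser):
    """Collect URLs of external stylesheets and scripts referenced by a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.assets = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and attributes.get("src"):
            self.assets.append(urljoin(self.base_url, attributes["src"]))
        elif tag == "link" and attributes.get("href"):
            rel_values = (attributes.get("rel") or "").lower().split()
            if "stylesheet" in rel_values:
                self.assets.append(urljoin(self.base_url, attributes["href"]))


def blocked_assets(html, base_url, robots_txt, user_agent="Googlebot"):
    """Return the asset URLs that robots_txt disallows for user_agent."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    collector = AssetCollector(base_url)
    collector.feed(html)
    return [url for url in collector.assets if not rules.can_fetch(user_agent, url)]


# Hypothetical page and rules, for illustration only.
PAGE_HTML = (
    '<html><head><link rel="stylesheet" href="/assets/site.css">'
    '<script src="/js/app.js"></script></head><body></body></html>'
)
ROBOTS_TXT = "User-agent: *\nDisallow: /js/\n"

blocked = blocked_assets(PAGE_HTML, "https://www.example.com/", ROBOTS_TXT)
print(blocked)
```

Here the script directory is blocked, so a crawler honoring these rules could fetch the page and its stylesheet but not the JavaScript needed to render it.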

Take advantage of the Google guidelines tool while employing your SEO techniques to know what files (if any) are set up as “blocked” from Googlebot. It only stands to reason that if web crawlers cannot understand your site’s contents, they cannot rank you. Google needs the right to crawl your web pages in order to understand them fully and match your content to relevant search queries. Put your page through the SEO tool to obtain a better idea of how Google sees your site. Or request us to perform this vital task for you. Then we can go over the results together so that you address any issues correctly.

Requesting crawl rate adjustments:

Submit your website to Google and wait at least 24 hours before seeking to determine if your crawl rate changed. Google support states that “The term crawl rate means how many requests per second Googlebot makes to your site when it is crawling it: for example, 5 requests per second. You cannot change how often Google crawls your site, but if you want Google to crawl new or updated content on your site, you should use Fetch as Google”.

If you use the Google Webmaster tools and go to site settings, you can request a limit to your crawl rate; the new rate lasts for ninety days.

Google Crawls Sites that Follow their Webmaster Guidelines

Use this checklist to confirm that your site correctly follows the Google webmaster guidelines*** for being crawled.

* Page headers are present when accessed by Googlebot; have correct site data architecture.

* Well-formed static links are discovered.

* The number of on page links is not excessive.

* Page avoids ordinary accessibility issues.

* Robots.txt file found and is correctly formed.

* All images have alt text to help GoogleBot understand what each image shows.

* All CSS and JavaScript files test as visible to Googlebot.

* Sitemaps for both search engines and users are available.

* No page speed issues.

NOTE: Additionally, you’ll want to know that your web server correctly supports the If-Modified-Since HTTP header. This helps your web server to tell GoogleBot if your content has changed or updated since its last crawl. Having this feature working for you saves on your website’s bandwidth and overhead.
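As a rough illustration of what supporting If-Modified-Since means, the sketch below shows the decision a web server makes: if the page has not changed since the date the crawler sends, it answers 304 Not Modified with no body; otherwise it sends the full page with 200. The function name `conditional_status` is hypothetical, and real servers and frameworks usually handle this for you.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def conditional_status(last_modified, if_modified_since=None):
    """Return 304 if the page is unchanged since the header date, else 200.

    last_modified must be a timezone-aware datetime.
    """
    if not if_modified_since:
        return 200  # No conditional header: send the full page.
    try:
        since = parsedate_to_datetime(if_modified_since)
    except (TypeError, ValueError):
        return 200  # Unparseable date: ignore the header.
    if since.tzinfo is None:
        since = since.replace(tzinfo=timezone.utc)
    # HTTP dates only carry whole seconds, so drop microseconds first.
    if last_modified.replace(microsecond=0) <= since:
        return 304
    return 200


if __name__ == "__main__":
    page_changed = datetime(2021, 3, 6, 12, 0, 0, tzinfo=timezone.utc)
    print(conditional_status(page_changed, "Sat, 06 Mar 2021 12:00:00 GMT"))
    print(conditional_status(page_changed, "Fri, 05 Mar 2021 12:00:00 GMT"))
```

The 304 response is where the bandwidth saving comes from, because the page body is never sent again.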

As businesses grasp the importance of how Google crawls their site, more and more we get requests to help them get new content indexed fast.

6 Ways to Get New Content Indexed Fast

1. Link to fresh content from your home page or a prominent web page on your website

2. Publish a Google Post about your new content

3. Invite Google bots by sharing a link to one or two of your new blog posts alongside a YouTube video

4. Add your new page to your sitemap and resubmit your sitemap

5. Make sure new content is added to your RSS feed and that the RSS feed is accessible to web crawlers

6. Add your Site to Qirina.com
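For the sitemap step in the list above, a minimal XML sitemap can be produced with a few lines of Python. This is only a sketch: the URLs and dates are placeholders, and most content management systems or SEO plugins generate this file automatically.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is 'YYYY-MM-DD'."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical pages; the newest post is listed with its publish date.
    print(build_sitemap([
        ("https://www.example.com/", "2021-03-01"),
        ("https://www.example.com/blog/new-post", "2021-03-06"),
    ]))
```

After adding the new page, resubmit the sitemap so Google knows there is something fresh to crawl.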

“Google essentially gathers the pages during the crawl process and then creates an index, so we know exactly how to look things up. Much like the index in the back of a book, the Google index includes information about your site’s ontology, words and their locations. When you search, at the most basic level, our algorithms look up your search terms in the index to find the appropriate pages.” – Matt Cutts of Google

“The web spider crawls to a website, indexes its information, crawls on to the next website, indexes it, and keeps crawling wherever the Internet’s chain of links leads it. Thus, the mighty index is formed.” – Crazy Egg

“Search engines crawl your site to get the contents into their index. The bigger your site gets, the longer this crawl takes. An important concept while talking about crawling is the concept of crawl depth. Say you had 1 link, from 1 site to 1 page on your site. This page linked to another, to another, to another, etc. Googlebot will keep crawling for a while. At some point though, it’ll decide it’s no longer necessary to keep crawling.” – Yoast on Crawl Efficiency

“We strongly encourage you to pay very close attention to the Quality Guidelines below, which outline some of the illicit practices that may lead to a site being removed entirely from the Google index or otherwise affected by an algorithmic or manual spam action. If a site has been affected by a spam action, it may no longer show up in results on Google.com or on any of Google’s partner sites.” – Google Webmaster Guidelines

How does Google Crawler handle redirect loops?

GoogleBot follows a minimum of five redirect hops when it requests your robots.txt file. Because no rules have been fetched yet, the redirects are followed for at least five hops, and if no robots.txt is discovered, the search giant treats it as a 404 for the robots.txt. Handling logical redirects for the robots.txt file based on HTML content that returns 2xx, such as frames, JavaScript, or meta refresh-type redirects, is not a best practice; in that case, the content of the first page is used for finding applicable rules.

In our audits, we find that old tracking pixels are a common issue. They should be either removed or updated so that they do not slow a site down without serving any purpose.
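The hop behavior can be pictured with a small simulation. The sketch below is not Googlebot's code: it models the described rule with a cutoff of exactly five hops (the text only says "a minimum of five"), and the `responses` dictionary stands in for real HTTP requests.

```python
REDIRECT_CODES = (301, 302, 307, 308)


def resolve_robots_txt(responses, start_url, max_hops=5):
    """Follow redirects for robots.txt; give up with a 404 after max_hops.

    responses maps a URL to (status, payload), where payload is the redirect
    target for redirect codes and the file body otherwise.
    """
    url = start_url
    # One initial request plus up to max_hops redirect hops.
    for _ in range(max_hops + 1):
        status, payload = responses.get(url, (404, ""))
        if status in REDIRECT_CODES:
            url = payload
            continue
        return status, payload
    # Still redirecting after max_hops: treat robots.txt as not found.
    return 404, ""


if __name__ == "__main__":
    # Hypothetical loop: two URLs that redirect to each other forever.
    loop = {
        "http://example.com/robots.txt": (301, "https://example.com/robots.txt"),
        "https://example.com/robots.txt": (301, "http://example.com/robots.txt"),
    }
    print(resolve_robots_txt(loop, "http://example.com/robots.txt"))
```

A robots.txt that is treated as a 404 means no crawl rules apply, which is rarely what a site owner intends, so it is worth keeping any redirect chain to the file short.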

How long can my robots.txt file be?

Google updated its Crawling and Indexing Documentation on August 27, 2020 to say that “Google currently enforces a size limit of 500 kibibytes (KiB), and ignores content after that limit.”*** It is the first time we have heard of any robots.txt length limit. Very few sites will be impacted by this limit on the size of the robots.txt file.
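To see whether your own file is anywhere near that limit, a check like the following sketch is enough. It assumes the limit is applied to the raw bytes of the file (500 KiB is 512,000 bytes); the function name `effective_robots_txt` is our own.

```python
MAX_ROBOTS_BYTES = 500 * 1024  # 500 KiB, per the quoted documentation


def effective_robots_txt(raw):
    """Split robots.txt bytes into the part within the limit and the rest.

    Returns (kept_bytes, number_of_ignored_bytes).
    """
    kept = raw[:MAX_ROBOTS_BYTES]
    return kept, len(raw) - len(kept)


if __name__ == "__main__":
    # Hypothetical oversized file: one rule repeated many times.
    raw = b"User-agent: *\n" + b"Disallow: /x\n" * 50000
    kept, ignored = effective_robots_txt(raw)
    print(f"{len(kept)} bytes read, {ignored} bytes ignored")
```

If the ignored count is above zero, rules near the end of the file may never be seen, so consolidate rules rather than letting the file grow.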

What does a “server error” mean in my GSC reports?

If you’ve ever wondered what it actually means when server errors are reported, Google now tells us that “Google treats unsuccessful requests or incomplete data as a server error.” The quality of the server that hosts your website is very important. Slow servers are often the guilty party when page load timeouts occur and are labeled as incomplete data. Google’s customer is the person using its search capabilities. People, especially those who search from mobile devices, want fast results. That means a slow server that cannot deliver your web content quickly is a real concern to prioritize.

How Google Regards 404/410 Status Codes and Indexing Old Pages

Frequently the question resurfaces as to how Google handles 404 and 410 error codes and how that impacts crawling a website. Google’s John Mueller responded to a question about web pages that no longer exist and the best way that a webmaster should manage it.

In a recent Webmaster Hangout, Google’s John Mueller responded to the question: “If a 404 error goes to a page that doesn’t exist, should I make them a 410?” with the following answer:

“From our point of view, in the midterm/long term, a 404 is the same as a 410 for us. So in both of these cases, we drop those URLs from our index.

We, generally, reduce crawling a little bit of those URLs so that we don’t spend too much time crawling things that we know don’t exist.

The subtle difference here is that a 410 will sometimes fall out a little bit faster than a 404. But usually, we’re talking on the order of a couple of days or so.

So if you’re just removing content naturally, then that’s perfectly fine to use either one. If you’ve already removed this content long ago, then it’s already not indexed so it doesn’t matter for us if you use a 404 or 410.” – John Mueller

It is worth noting that by using the 410 status code, SEOs can slightly speed up the process of Google removing the web page from its index. Mueller also stated that “the 410 response is primarily intended to assist the task of web maintenance by notifying the recipient that the resource is intentionally unavailable and that the server owners desire that remote links to that resource be removed”.

“It turns out webmasters shoot themselves in the foot pretty often. Pages go missing. People misconfigure sites. Sites go down. People block GoogleBot by accident.

So if you look at the entire web, the crawl team has to design to be robust against that. So with 404s, along with I think 401s and maybe 403s, if we see a page and we get a 404, we are going to protect that page for 24 hours in the crawling system.” – John Mueller**
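In practice, the choice between the two codes comes down to whether you removed the page on purpose. Here is a minimal sketch of that rule, assuming you keep a list of intentionally retired URLs; the paths and the `status_for` helper are hypothetical.

```python
from http import HTTPStatus

# Hypothetical site data: pages that exist, and pages removed on purpose.
LIVE_PATHS = {"/", "/services"}
REMOVED_PATHS = {"/old-product", "/2015-sale"}


def status_for(path):
    """Pick the HTTP status for a requested path."""
    if path in LIVE_PATHS:
        return HTTPStatus.OK          # 200: page exists
    if path in REMOVED_PATHS:
        return HTTPStatus.GONE        # 410: intentionally removed
    return HTTPStatus.NOT_FOUND       # 404: unknown or mistyped URL


if __name__ == "__main__":
    for path in ("/services", "/old-product", "/no-such-page"):
        print(path, int(status_for(path)))
```

Either code leads to the URL being dropped from the index, so the 410 list is a refinement rather than a requirement.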

A Major Part of SEO is Crawling and Indexing

With so many tasks involved today in digital marketing and improving site performance with current SEO best practices, many small businesses feel challenged to give sufficient time and effort to Google crawl optimization. If you fall in this bucket, it is quite possible you are missing a significant amount of traffic. We can help you ensure that the primary pages that serve your audience’s needs are crawled and indexed correctly.

Crawl optimization should be a highly rated priority for any large website seeking to improve its SEO efforts. Even with the best of e-Commerce Schema implementation, if your site isn’t indexed correctly, you have a real problem. By implementing tracking, monitoring your Google Analytics SEO reports, and directing GoogleBot to your key web content, you can gain an advantage over your competition.

Summary

In order to be indexed and returned in search engine results, your website should be easy to crawl first. If you think your business website is poorly indexed or returned, it is important to determine if your site is correctly crawled. Start with a full website SEO audit, implement improvements, and then see the benefit you gain in increased Internet traffic and site views.

Remember, reaching your goal of having your website indexed by Google is only the first step in successful digital marketing. To improve your website beyond being crawled and indexed, make sure you’re following basic SEO principles, creating high-value content users want, and getting rich data insights from Google Analytics. Then, you’ll be in a better position to integrate organic and paid search.

Hill Web Creations can offer you new ideas on how to “encourage” Google to re-crawl your website, or select web pages that have been recently updated. Call 661-206-2410 and ask for Jeannie. The benefits of our work will show up in your future comprehensive SEO Reports.

Or you can start by checking out our Types of Website Audits Available

* https://support.google.com/webmasters/answer/35769

** https://www.youtube.com/watch?v=kQIyk-2-wRg

*** https://developers.google.com/search/docs/advanced/robots/robots_txt